Kharma's paper (1)

A Hybrid Evolutionary Algorithm, Utilizing Novelty Search and Local Optimization, Used to Design Convolutional Neural Networks for Handwritten Digit Recognition

Abstract

These scholoars investigate the design of CNNs using Cartesian Genetic programing(CGP), an EA variant. And use Simulate Annealing to local optimize each optima. And this strategy can help to reduce lots of computational effort.

Details

They use threestage evolutionary optimization approach. First stage aims to generate a diverse initial generation of CNNs( using the Novelty Search alogrithm). The second stage involves the evolution of CNN architectures (using the Catesian Genetic Programming). Third stage, they selects the most diverse generation from the previous stage. Finally use the stochastic local search(SLS) algorithm to exploit the local neighbourhoods.

Methods

They evolve two separate populations of CNNs. The first population is begininng with a randomly generated initial generation. The second population yields the initial generation for the evolutionary algorithm. Each designed of their networs combine two subnetwors: a fuly convolution neural network followed by a fully connected(dense) network.

Genetic Encoding

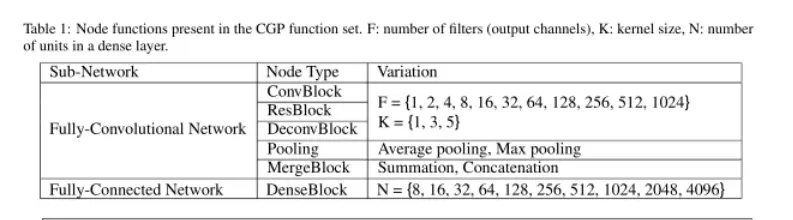

Fellowing the Table1 to design Sub-Network F: number of

filters(output channels) K: Knerel size N: number of units in a dense

layer

Fellowing the Table1 to design Sub-Network F: number of

filters(output channels) K: Knerel size N: number of units in a dense

layer

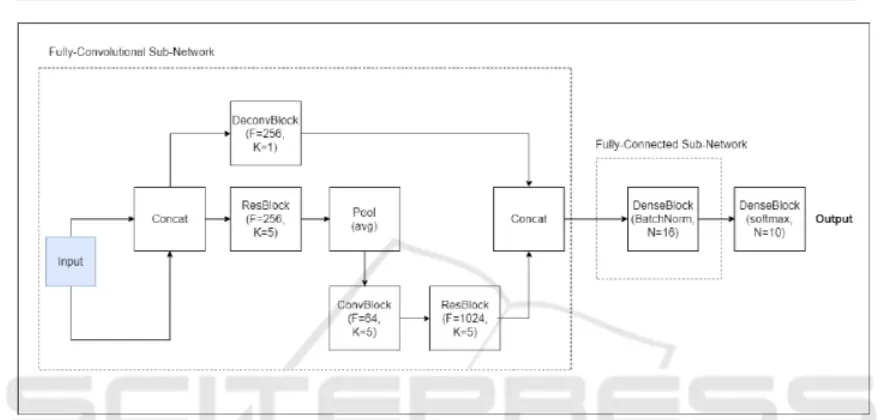

The network structure we can see from this figure.

The network structure we can see from this figure.

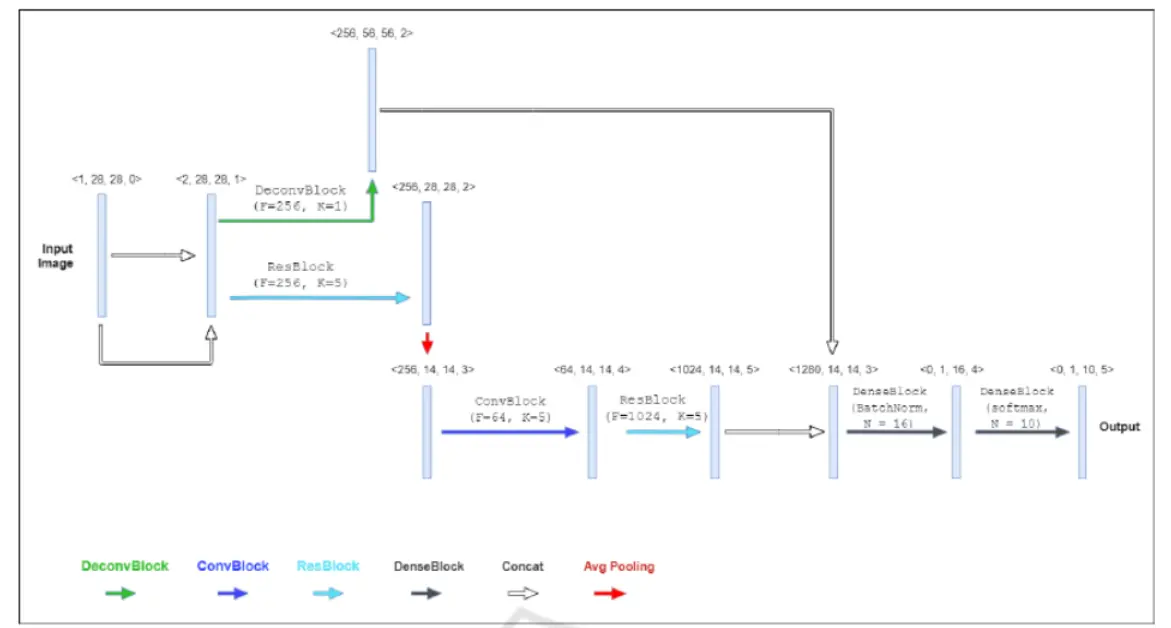

Phenotype of the best performing CNN architecture

obtained by the proposed method, with the vector representation of each

layer indicated. The figure illustrates the functions of each of the

modules represented in the genotype illustrated in Figure 1. The

coloured arrows indicate the various functions: DeconvBlock (green),

ConvBlock (blue), ResBlock (cyan), DenseBlock (dark gray), Concatenation

(white), and Average Pooling (red).

Phenotype of the best performing CNN architecture

obtained by the proposed method, with the vector representation of each

layer indicated. The figure illustrates the functions of each of the

modules represented in the genotype illustrated in Figure 1. The

coloured arrows indicate the various functions: DeconvBlock (green),

ConvBlock (blue), ResBlock (cyan), DenseBlock (dark gray), Concatenation

(white), and Average Pooling (red).

They mention the novelty score to represent the effect of the

algorithm.

Local Optimization

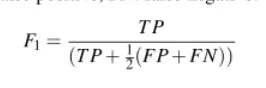

The whole algorithm base on the F1 score and novelty score to select the individuals which are determined to be located near or at local optima.

Thus every architecture has an evalution metric and a bovelty metric, the sampling of local optima can be treated as a multi-objective optimization problem. Then they use the NSGA-2 algorithm to reduce the time-complexity or/ and improved the convergence to the true Pareto Optimal front.

Then the solution found by NSGA-2 will be fed into a local search optimizer. They chose Simulated annealing(which can help to skip some local optima) as the local optimization algorithm.

Experimental Setting

They split MNIST data-set into a training set of 50000 images and held-out validation set of 10000 images. Image pixel values are normalized by dividing each value by 255.

Result

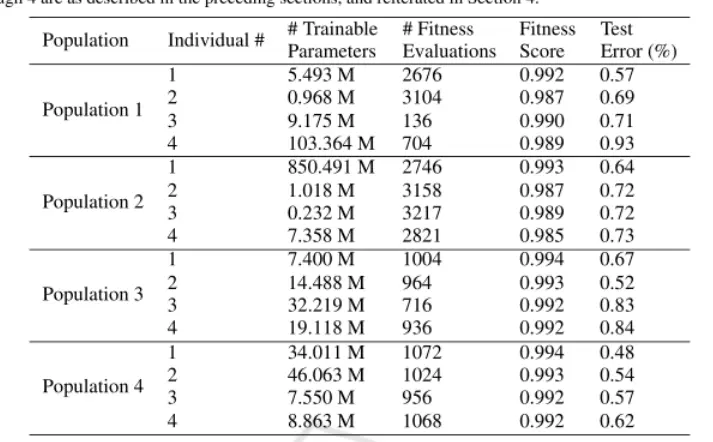

1. Population1: the population obtained

using the standard evolutionary algorithm 2. Population 2: the

population obtained using the evolutionary alogrithm with Novelty search

initialization. 3. Population 3: the population obtained from the

standard evolutionary algorithm and subsequent local optimization via

Simulated Annealing. 4. Population 4: the population obtained from the

evolutionary algorithm with Novelty Search initialization and subsequent

local optimization via Simulated Annealing.

1. Population1: the population obtained

using the standard evolutionary algorithm 2. Population 2: the

population obtained using the evolutionary alogrithm with Novelty search

initialization. 3. Population 3: the population obtained from the

standard evolutionary algorithm and subsequent local optimization via

Simulated Annealing. 4. Population 4: the population obtained from the

evolutionary algorithm with Novelty Search initialization and subsequent

local optimization via Simulated Annealing.

Conclusions

Scholars investigated the use of a three-stage CGP-based hybrid evolutionary algorithm(EA) for the training of CNNs. They demostrat how the final stage of the proposed methodology (simulated annealing) is able to optimize the potential locally optimal individuals found in previous stages, and do so more efficiently than a nor-hybridized EA. And use the novelty as an objective has the effect of reducing the variance of recognition errors by an order of magnitude compared to the pure evolutionary approach.